
8 min read

10.03.2026, By Stephan Schwab

Methodologies start as tools. In captured organizations they become loyalty tests: technical disagreement is treated as disloyalty, governance turns into narrative control, and evidence gets rewritten until the slide deck looks like truth. This is how competent engineers end up punished for reality, vendors get squeezed into validating fiction, and the cost shows up as moral injury long before it shows up in delivery metrics. The way out is not better rhetoric; it is boundaries and an evidence trail that survives the next rewrite.

We have all seen it happen. A tool selected to solve a specific problem metastasizes into an ideology. What starts as a “hypothesis for better delivery” hardens into a party line that cannot be questioned without questioning the moral character of the dissenter.

The shift is subtle at first, but the mechanics are brutally predictable. The question stops being whether the software works and becomes whether the compliance theatre was performed convincingly.

You don’t need a degree in organizational psychology to spot this. You just need to look at who gets promoted and who gets fired.

The first sign is that the methodology shifts from being a hypothesis to being an identity marker. You are either “with us” (and the methodology) or you are “one of them” (the legacy thinkers, the resisters). Disagreement is no longer processed as technical feedback; it is treated as opposition.

Technical reality — limitations of code, constraints of physics, lack of capacity — is reframed entirely. It is never that the plan is impossible. It is always that you have “quality issues,” “adoption problems,” or the dreaded “wrong mindset.”

Authority moves upward to the Narrative Owners (who produce nothing but slides), while accountability moves downward to the execution teams (who get blamed when the plan hits reality).

When things inevitably go wrong, decisions are retroactively rewritten. Agency is laundered. Failures are misattributed in order to shield the owners or the method itself. Evidence that contradicts the official story is delegitimized as “assumptions,” “negativity,” or “lack of alignment.”

We see this manifest in specific, brutal ways for different actors in the system:

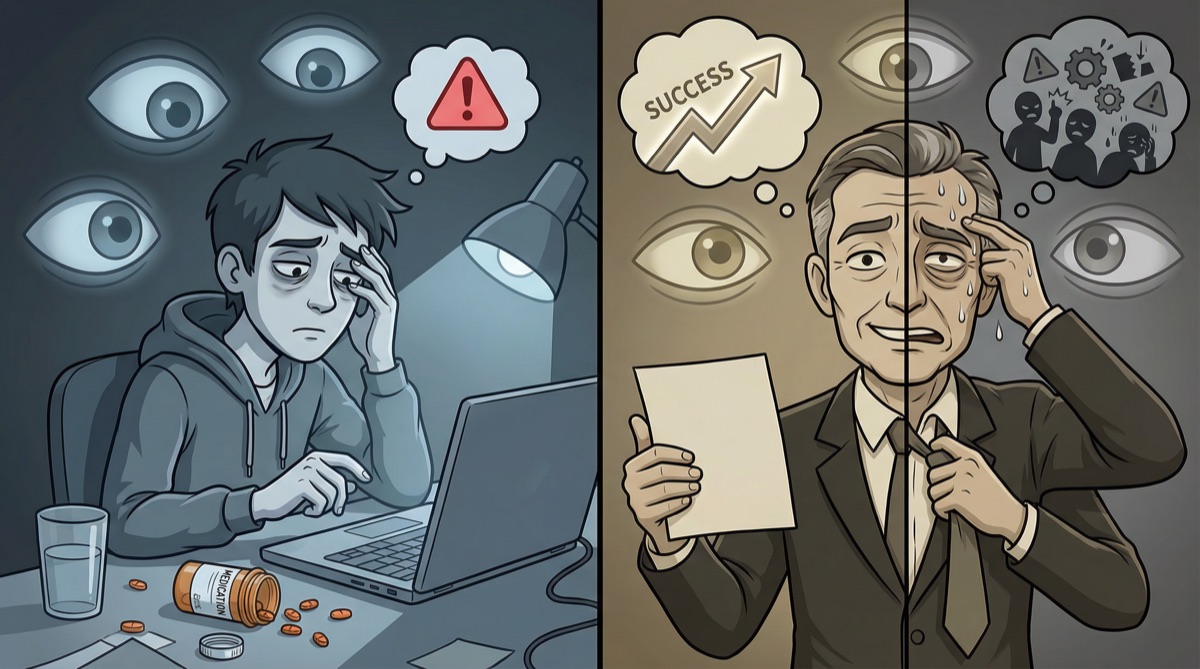

The Employee: Punished for Competence

The engineer who points out that the architecture cannot support the methodology’s promises is not thanked for preventing a disaster. They are labeled “toxic,” “negative,” or “resistant to change.” They are systematically sidelined, put on performance improvement plans for “behavioral issues” (which really means “refusal to participate in the fantasy”), or fired.

The Vendor: Held Hostage by Narrative

Commercial relationships become weaponized. If a vendor attempts to signal that the project is off track, they are treated as an enemy of the state. Invoices get held up for “administrative reasons,” scope is maliciously reinterpreted, or they are subjected to endless governance meetings. The message is clear: validate our delusion, or we will make your life impossible. The vendor who capitulates and lies about “success” gets paid; the one who insists on reality gets starved.

The Political Parallel

For engineers, this is baffling. We are trained to believe that if we prove something is broken, it will be fixed. But these systems function like fracturing political parties, not engineering teams. Factions fight to push each other out, not to improve the product, but to capture territory. It is never about facts. It is never about the software. It is entirely about power. If you bring data to this conflict, you are bringing a spreadsheet to a knife fight.

Learning stops. Narrative stabilization becomes the only goal.

Why do otherwise rational professionals behave like ideological enforcers? The answer lies in identity fusion. The methodology becomes part of the personal and organizational self-image. To critique the method is to launch a personal attack on its sponsors.

Cognitive dissonance avoidance runs the show. Admitting that the promise and the reality are misaligned would require painful reversals and apologies. It is far easier to deny reality or reframe it than to admit you spent five million dollars on something that does not deliver.

Systems engage in an “authority preservation reflex.” They protect perceived authority before they protect truth, because authority is what enables coordination (or at least the appearance of it). The method becomes moralized. It is not just a way; it is the right way. Disagreement becomes a values violation.

We also see asymmetric risk perception. External methodology authors and paid advocates can disengage and move to the next client; owners, senior executives, and board members have to live with the outcome. This skews whose reality counts. When pressure mounts, complex failure is scapegoated into individual fault — “bad execution,” “bad quality” — to restore order quickly without examining the systemic flaw.

Social proof hardens the cement. Conferences, certifications, and public alignment raise the exit costs. If you have built your career on being the Certified Expert of the Method, or on being the pilot and showcase organization for it, you cannot afford to notice that the Method is naked. Threat response overrides learning response. When survival feels at risk, systems choose control and closure over exploration.

The Enforcer’s Reward

Those who actively rewrite history or attack dissenters are not just protecting their jobs; they are receiving a specific psychological payoff. Technical reality creates anxiety, a tear in the fabric of their constructed world. Silencing the outlier resolves that anxiety. It feels like “fixing” the problem. By removing the signal, they believe they have removed the noise.

Furthermore, defining truth is the ultimate act of power. Successfully asserting that “up is down” and having the organization nod in agreement validates their status. If facts mattered, anyone could be right. But if only the sanctioned story matters, then only the gatekeepers have power.

Will It Ever Stop?

No. Not internally. The system has no braking mechanism. As long as external resources (investors, profitable legacy products) subsidize the dysfunction, the behavior accelerates. It only stops when the host organism dies.

The results are structurally inevitable. Technical capability erodes because the people who care about technical capability have been driven out. Honest feedback disappears because it is dangerous.

Attrition accelerates among the competent staff who actually know how to build things. Delivery slows to a crawl while process artifacts (charts, reports, maturity models) multiply. The methodology survives; the product degrades.

And when the collapse comes, it is rarely dramatic. It is not an explosion. It is a quiet, internal rotting. The organization continues to exist, but it has lost the ability to do anything other than sustain its own compliance machinery.

For the craftsman who does not understand that they are in a political theater, the cost is often physical. Because they believe that facts matter, they interpret the rejection of facts as a failure of their own communication or effort.

They work harder. They stay later. They write longer documents explaining why the bridge will collapse. And when the bridge collapses and they are blamed for not being “positive enough,” the cognitive dissonance turns inward.

This is not just “stress” or “burnout.” It is moral injury: a fundamental violation of professional integrity that often registers in the body. It can show up as chronic insomnia, anxiety that mimics a heart attack, autoimmune flare-ups, and a crushing, pervasive depression. They are exhausting themselves trying to use logic to cure an ideology, and because logic has no purchase there, the effort hollows them out from the inside.

If you find yourself inside such a system, understand that direct confrontation is often treated as a threat. The system will defend the narrative before it defends reality.

1. Decouple your identity

Your job is to build working software with integrity. Do not let the party line consume your self-worth. Meet formal expectations without letting them replace engineering. Keep your craft sharp and your boundaries clear.

2. Create a record of reality

Methodologies thrive in the absence of data. Counter them with evidence. The same mechanism is described in Bridging the Great Divide: replace opinions with observed facts. Caimito Navigator is not a task tracker; we built it out of painful experience with this exact trap, so that we can run structured Developer Advocate engagements without getting absorbed into the narrative. It captures lightweight daily observations and synthesizes them into patterns of friction, recurring blockers, and decision impact. Weekly reports turn those patterns into management-ready conclusions and recommendations. That protects the engagement and gives affected employees both a shared record they can point to and a structured voice that can reach senior management. A deeper take on incentives and accountability lives in Management Frameworks, Incentives, and Accountability.

In a healthy organization, such a record leads to action. When management is hijacked by an ideological narrative, the record rarely changes the minds of the committed. But it does create a shared, reviewable account of reality. Observers can read it, compare weeks, and see whether decisions follow evidence or the party line.
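If you have no tooling at all, even a tiny dated log can serve as a record of reality. The sketch below is only an illustration of that idea and says nothing about how Navigator works internally. The `Observation` fields, the `recurring_blockers` helper, and the sample entries are all hypothetical. It counts in how many distinct calendar weeks each blocker was observed, so that a one-off annoyance can be told apart from a recurring pattern.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Observation:
    """One dated, factual note. All field names are hypothetical."""
    day: date
    author: str
    blocker: str  # short tag for what got in the way
    note: str     # what was actually observed, in plain words


def recurring_blockers(log, min_weeks=2):
    """Return {blocker: week_count} for blockers seen in at least
    `min_weeks` distinct ISO calendar weeks."""
    weeks_seen = {}
    for obs in log:
        iso = obs.day.isocalendar()
        week = (iso[0], iso[1])  # (ISO year, ISO week number)
        weeks_seen.setdefault(obs.blocker, set()).add(week)
    return {b: len(w) for b, w in weeks_seen.items() if len(w) >= min_weeks}


# Invented sample entries, for illustration only.
log = [
    Observation(date(2026, 3, 2), "dev-a", "no test environment",
                "Release check was done by hand; staging was unavailable."),
    Observation(date(2026, 3, 4), "dev-b", "no test environment",
                "Could not reproduce the reported defect before release."),
    Observation(date(2026, 3, 4), "dev-b", "unclear decision owner",
                "Nobody could say who approved the scope change."),
    Observation(date(2026, 3, 10), "dev-a", "no test environment",
                "Integration tests were skipped again."),
    Observation(date(2026, 3, 17), "dev-c", "no test environment",
                "Hotfix shipped without a staging run."),
]

print(recurring_blockers(log))
```

Counting distinct weeks rather than raw entries is a deliberate choice in this sketch: two people noting the same problem on the same day should not inflate it, while the same problem returning week after week is exactly what a reader comparing weeks needs to see.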

Navigator also includes an AI chat interface for private conversations with the evidence. That does not fix a toxic system. It can help an affected person think clearly, test assumptions, and decide what they are willing to accept.

3. Make the work legible (without becoming a shield)

You do not have to pretend or rename normal work. You do have to be understood. Describe technical work in plain outcomes: risk reduction, faster feedback, fewer incidents, smoother delivery.

Do not let clarity turn you into a credibility shield. If the system wants your competence mainly to legitimize decisions you do not support, state the boundary. Stay factual. Do not sign off on claims you do not believe. When necessary, insist that trade-offs and risks are written down with clear ownership.

4. Decide consciously

When the demand for narrative adherence permanently exceeds the demand for working software, the technical debt is no longer in the code. It is in the culture.

You cannot refactor culture from the inside on willpower alone. If you are not allowed to practice basic engineering discipline like TDD, change your employer. That is not drama. That is hygiene.

If it is less absolute than that, decide what you will try, what you will refuse, and what your conditions are for staying engaged.
