10 min read

07.03.2026, By Stephan Schwab

When your business runs on a decade-old application with VBA customizations that nobody fully understands, modernization is not optional — it is survival. Success requires using AI for knowledge extraction from legacy VBA, building Java test suites for validation, and applying the Swiss cheese model to ensure your new implementation produces exactly the same results. Because almost right means business failure.

Somewhere in your organization, there is a business application. It has been there for fifteen years. It started as off-the-shelf software, but the vendor exposed VBA for customization, and your organization took advantage of it. What began as simple automation grew into business-critical logic, and now runs pricing decisions worth millions. The person who wrote these customizations retired in 2019. The documentation is the code itself — if you can call it documentation.

This is not a hypothetical scenario. I see similar situations regularly. The VBA customizations that calculate insurance premiums in a policy management system. The accounting software with VBA scripts that reconcile international payments. The CRM platform where VBA automates complex lead scoring. All business-critical. All irreplaceable. All terrifying.

When organizations finally decide to modernize these systems, they face a fundamental problem: nobody knows exactly what the system does. They know what it is supposed to do. They know what buttons to press. But the actual logic — the edge cases, the special handling, the quirks that accumulated over years of patches — exists only in the code.

Most legacy modernization projects fail for one simple reason: the new system does not produce the same results as the old one.

This sounds obvious. Of course the new system should match the old one. But matching is harder than it appears. A pricing calculation that differs by 0.3% might seem acceptable until you realize that 0.3% across ten thousand transactions per day adds up to a significant revenue discrepancy. Finance notices. Auditors ask questions. Trust evaporates.
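To make the scale concrete, here is the arithmetic under two purely illustrative assumptions that the scenario above does not specify: an average transaction value of $200 and 250 business days per year.

```latex
\begin{aligned}
\text{daily discrepancy}  &= 10\,000 \times \$200 \times 0.003 = \$6\,000 \\
\text{annual discrepancy} &= \$6\,000 \times 250 = \$1\,500\,000
\end{aligned}
```

Change the assumptions and the total moves, but a small per-transaction error is still multiplied by every transaction processed.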

The problem is not technical capability. Modern developers can build elegant systems. The problem is knowledge extraction. How do you capture what the legacy system actually does, including all the undocumented behaviors that have become business requirements simply because they have been happening for years?

This is where AI becomes genuinely useful — not as a replacement for developers, but as an archaeological assistant that never gets bored reading terrible code.

VBA written in 2008 by a finance professional who learned programming from trial and error has a particular character. Variables named temp2, finalFinal, and thisOneWorks. Functions that span 400 lines. Copy-pasted blocks with subtle variations. Comments that say “don’t touch this” without explaining why.

An AI assistant can read through this code and extract meaning that would take a human developer days to piece together. Feed it a 2000-line VBA module and ask: “What business rules are encoded in this function?” The AI will identify the calculation steps, note the conditional branches, and describe the logic in plain language.

This workflow is particularly effective in IDEs such as VS Code, where you can maintain a running dialog with the AI. You can ask it to analyze specific code sections and record its findings in a separate markdown file, creating a structured investigation log. This file becomes your navigation chart, highlighting ambiguous logic, hardcoded constants, and strange dependencies that need further investigation.

But here is the critical point: the AI’s interpretation is a hypothesis, not a verified fact. The AI might misunderstand obscure VBA syntax. It might miss context that only makes sense if you know the business domain. It might confidently explain logic that the original author got wrong, and that wrongness has become the expected behavior.

You cannot trust the AI’s output directly. You need validation.
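One concrete place where a plausible reading goes wrong is rounding. VBA's built-in `Round` function uses banker's rounding (a tie goes to the nearest even digit), while Java's `Math.round` rounds a tie up. The sketch below contrasts the two. `RoundingParity`, `vbaStyleRound`, and `naiveRound` are hypothetical names, and because VBA rounds a binary `Double` rather than a decimal value, the decimal-based helper is an assumption to be confirmed against captured legacy outputs, not a guaranteed match.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

class RoundingParity {

    // Intended to match VBA's Round(x, digits), which rounds a tie to the
    // nearest even digit (banker's rounding). Parsing the decimal string keeps
    // the value exact; VBA rounds a binary Double, so inputs such as 2.675 that
    // have no exact binary form should be checked against the legacy oracle.
    static BigDecimal vbaStyleRound(String value, int digits) {
        return new BigDecimal(value).setScale(digits, RoundingMode.HALF_EVEN);
    }

    // What a quick line-by-line translation might use instead:
    // Math.round rounds a tie up, toward positive infinity.
    static long naiveRound(double value) {
        return Math.round(value);
    }

    public static void main(String[] args) {
        System.out.println(vbaStyleRound("2.5", 0));   // 2
        System.out.println(naiveRound(2.5));           // 3
        System.out.println(vbaStyleRound("3.5", 0));   // 4
        System.out.println(vbaStyleRound("0.125", 2)); // 0.12
    }
}
```

It gets murkier: code that calls Excel's worksheet `ROUND` through `WorksheetFunction` rounds halves away from zero instead. An AI explanation that says "the function rounds the premium to two decimals" is accurate and still incomplete; only the captured outputs settle which rule is in effect.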

The solution is to build a comprehensive test suite before writing a single line of replacement code. Not tests that verify your understanding of what the system should do — tests that capture what the system actually does.

This requires running the legacy system with known inputs and recording the outputs. Every scenario. Every edge case you can identify. Every combination of parameters that the business uses in practice.

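```java
import static org.junit.jupiter.api.Assertions.assertEquals;

import org.junit.jupiter.params.ParameterizedTest;
import org.junit.jupiter.params.provider.MethodSource;

class LegacyParityTest {

    // legacyCalculationTestCases() (a static Stream<Arguments> factory, one entry
    // per captured legacy run) and the newCalculationEngine field live elsewhere
    // in this class and are omitted here.
    @ParameterizedTest
    @MethodSource("legacyCalculationTestCases")
    void newCalculation_MatchesLegacyOutput(CalculationInput input, LegacyOutput expectedOutput) {
        var result = newCalculationEngine.calculate(input);

        // Compare each figure separately so a failure names the one that drifted.
        // The 0.0001 delta assumes double-valued getters.
        assertEquals(expectedOutput.getPremium(), result.getPremium(), 0.0001);
        assertEquals(expectedOutput.getTax(), result.getTax(), 0.0001);
        assertEquals(expectedOutput.getFees(), result.getFees(), 0.0001);
    }
}
```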

AI tools accelerate this harness construction. Instead of manually determining which inputs cover all code paths, feed the VBA logic to an AI assistant. Ask it to identify boundary values and conditional triggers. It will spot that line 403 checks for transactions over $10,000 and suggest a test case for $10,001. It can also generate the Java data structures (DTOs) directly from VBA Type definitions, handling the tedious syntax translation instantly.
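As an illustration of the DTO translation, suppose the legacy module declares a `Type` like the hypothetical `PolicyQuote` below (the field names are invented for this sketch). A Java record is one natural target. The type mappings in the comments are the part worth reviewing, since they are where a mechanical translation can quietly change behavior.

```java
import java.math.BigDecimal;
import java.time.LocalDate;
import java.util.Objects;

// Hypothetical VBA definition, shown only as a comment:
//
//   Type PolicyQuote
//       CustomerId As Long
//       BasePremium As Currency
//       RiskFactor As Double
//       StartDate As Date
//       IsRenewal As Boolean
//   End Type
//
// One plausible Java translation as an immutable record.
record PolicyQuote(
        int customerId,          // VBA Long is a 32-bit signed integer, like Java int
        BigDecimal basePremium,  // VBA Currency is fixed-point with 4 decimal places
        double riskFactor,       // VBA Double and Java double are both IEEE 754 64-bit
        LocalDate startDate,     // VBA Date also stores a time of day; dropped here by assumption
        boolean isRenewal) {

    // Compact constructor: reject values the VBA fields could never have held.
    PolicyQuote {
        Objects.requireNonNull(basePremium, "basePremium");
        Objects.requireNonNull(startDate, "startDate");
        if (basePremium.scale() > 4) {
            throw new IllegalArgumentException("Currency holds at most 4 decimal places");
        }
    }
}
```

Choosing `BigDecimal` for `Currency` keeps the fixed-point semantics. Mapping it to `double` would compile just as happily and reintroduce exactly the kind of small drift the characterization tests are meant to catch.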

We can go further. Under human supervision, in a continuous dialog, the AI can build the tests in a TDD fashion. Instead of attempting to understand the entire system at once, you use the AI to discover rules incrementally and lock each one down with a test. This interactive process keeps the human in control, verifying the logic, while the AI handles the heavy lifting of syntax and structure.
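A minimal sketch of one turn of that loop in plain Java, without a test framework. It locks down the $10,000 threshold mentioned above. The class and method names, the strictly-greater-than reading of the rule, and the expected results in `BOUNDARY_CASES` are illustrative assumptions standing in for values captured from the VBA.

```java
import java.math.BigDecimal;
import java.util.List;

class LargeTransactionRule {

    // Hypothetical rule surfaced during analysis: amounts strictly above 10,000
    // take a separate path. Whether the VBA uses > or >= is exactly the kind of
    // detail the boundary cases below are meant to settle.
    static final BigDecimal THRESHOLD = new BigDecimal("10000");

    static boolean isLargeTransaction(BigDecimal amount) {
        return amount.compareTo(THRESHOLD) > 0; // compareTo ignores scale: 10000.00 equals 10000
    }

    // A boundary input and the answer the legacy system gave for it. These
    // expected values are illustrative; in practice they come from running the VBA.
    record Case(BigDecimal amount, boolean legacyResult) {}

    static final List<Case> BOUNDARY_CASES = List.of(
            new Case(new BigDecimal("9999.99"), false),
            new Case(new BigDecimal("10000.00"), false),
            new Case(new BigDecimal("10000.01"), true),
            new Case(new BigDecimal("10001"), true));

    // Red/green loop: add a case, watch it fail, adjust the rule, repeat.
    // An empty list means every recorded case is locked down.
    static List<Case> mismatches() {
        return BOUNDARY_CASES.stream()
                .filter(c -> isLargeTransaction(c.amount()) != c.legacyResult())
                .toList();
    }

    public static void main(String[] args) {
        List<Case> failing = mismatches();
        System.out.println(failing.isEmpty() ? "all boundary cases match" : "mismatches: " + failing);
    }
}
```

A case that fails is a hypothesis about the VBA that turned out wrong, caught before any replacement code depends on it.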

Sometimes, executing the legacy VBA is difficult due to environmental dependencies. In these situations, the AI can analyze the logic structure to generate sensible synthetic test data. It identifies the necessary input combinations to trigger specific paths, allowing you to build comprehensive test scenarios even when the original runtime environment is fragile.
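A sketch of what that synthetic data can look like: the AI proposes values on either side of each condition it found, and the harness enumerates every combination. The parameter names, values, and the `SyntheticInputs` class are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class SyntheticInputs {

    // Hypothetical values an AI might propose after reading the branches:
    // each list straddles a condition (age limits at 18 and 65, a region
    // switch, a renewal flag).
    static final List<String> REGIONS = List.of("DOMESTIC", "EU", "OTHER");
    static final List<Integer> AGES = List.of(17, 18, 65, 66);
    static final List<Boolean> RENEWAL = List.of(true, false);

    record Input(String region, int age, boolean renewal) {}

    // Full Cartesian product of the parameter lists: every combination once.
    static List<Input> allCombinations() {
        List<Input> inputs = new ArrayList<>();
        for (String region : REGIONS) {
            for (int age : AGES) {
                for (boolean renewal : RENEWAL) {
                    inputs.add(new Input(region, age, renewal));
                }
            }
        }
        return inputs;
    }

    public static void main(String[] args) {
        allCombinations().forEach(System.out::println);
    }
}
```

The full product grows multiplicatively with each parameter (here 3 × 4 × 2 = 24 inputs). When it becomes too large to push through the legacy system, a pairwise selection that covers every pair of values at least once is a common compromise.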

The test cases come from the legacy system. Run the VBA with these inputs, capture those outputs, store them as your validation baseline. Now you have an oracle — a source of truth that defines correct behavior not by specification but by observation.
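Once a run has been captured, the baseline has to be loaded back into the test suite. A minimal sketch, assuming the legacy outputs were exported to a simple comma-separated file with a header row; the column layout and the `GoldenMaster` and `Observation` names are hypothetical.

```java
import java.math.BigDecimal;
import java.util.List;

class GoldenMaster {

    // One recorded observation: the inputs fed to the legacy VBA and the
    // premium it returned. The column layout is a hypothetical example.
    record Observation(String policyId, BigDecimal basePremium,
                       BigDecimal riskFactor, BigDecimal legacyPremium) {}

    // Parses CSV lines exported from a legacy run, header first. Assumes no
    // quoted fields or embedded commas; a real export may need a CSV library.
    // Values are read as BigDecimal so the baseline keeps exactly the digits
    // the legacy system produced.
    static List<Observation> parse(List<String> lines) {
        return lines.stream()
                .skip(1)                                // header row
                .filter(line -> !line.isBlank())        // tolerate trailing blank lines
                .map(line -> line.split(",", -1))
                .map(f -> new Observation(
                        f[0].trim(),
                        new BigDecimal(f[1].trim()),
                        new BigDecimal(f[2].trim()),
                        new BigDecimal(f[3].trim())))
                .toList();
    }
}
```

In the JUnit suite, the `legacyCalculationTestCases` factory could read the file with `Files.readAllLines`, pass the lines to `parse`, and turn each observation into test arguments.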

Essentially, we are building a model of the old system at this point. We are not yet designing the new architecture; we are creating a high-fidelity behavior map of the legacy application. This executable model becomes the requirements specification that no written document could ever equal.

This approach has a name in testing circles: characterization tests, or sometimes golden master tests. You are characterizing existing behavior, not specifying new behavior. The legacy system is the specification.

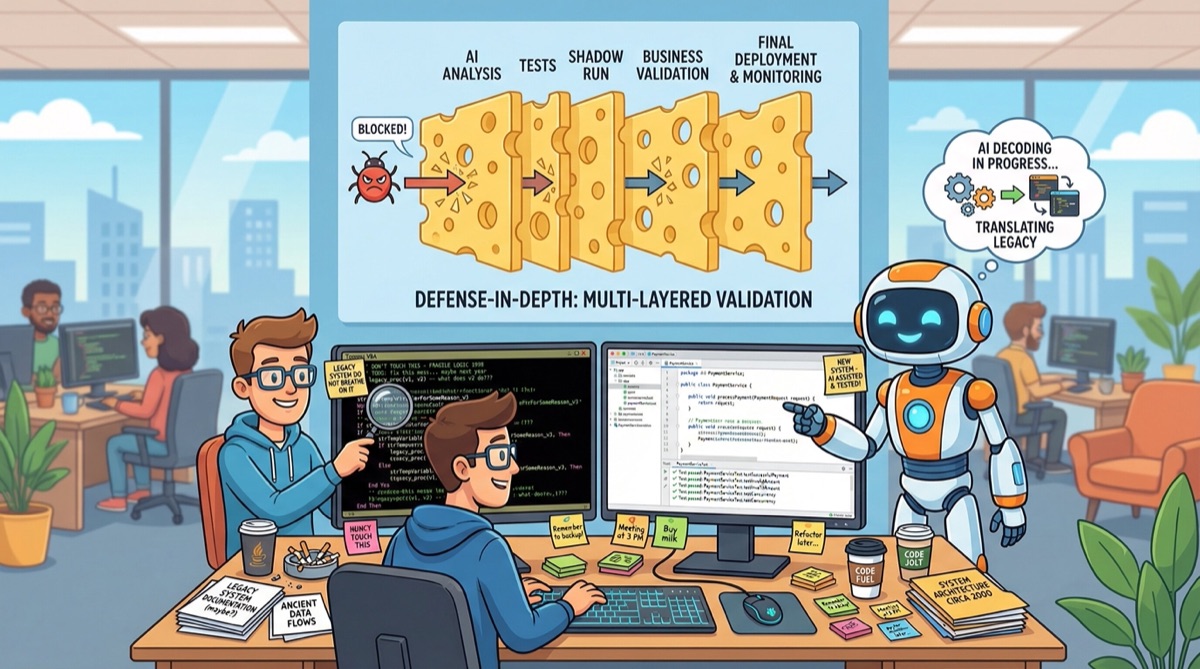

Here is where the Swiss cheese model becomes essential. James Reason developed this model to explain how accidents happen in complex systems. The idea is simple: every defensive layer has holes, like slices of Swiss cheese. An accident occurs when the holes align, allowing a hazard to pass through all layers.

This requires a psychological shift. Most of us are trained to seek perfection: the correct answer, the bug-free function, the complete specification. Complexity and ambiguity feel like failures. But in legacy modernization, seeking a single “perfect” validation method is a trap that leads to analysis paralysis.

We must accept that every tool and every layer will be flawed. The AI will misinterpret some logic. The test data will miss a scenario. The shadow run will fail to catch a seasonal edge case. Accepting this imperfection is not defeatism; it is the statistical reality of complex systems. By acknowledging that no single layer is trustworthy, we are forced to build resilience through redundancy.

In legacy modernization, the hazard is incorrect behavior slipping into production. Each validation layer catches some problems but misses others. The solution is not to find the perfect layer — it is to stack multiple imperfect layers so their holes do not align.

Layer 1: AI-assisted code analysis. Have the AI explain what each VBA function does. Review its interpretation. Ask follow-up questions. Build a mental model of the system.

Layer 2: Input/output capture. Extract real calculation examples from the legacy system. Not synthetic test cases — actual historical inputs and their corresponding outputs.

Layer 3: Characterization tests. Build the Java test suite that runs your new implementation against the captured baselines. Every failing test reveals a behavioral difference.

Layer 4: Shadow running. Deploy the new system alongside the old one. Run real transactions through both. Compare outputs continuously. Alert on any discrepancy.
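A minimal in-process sketch of the comparison step in shadow running. `ShadowRunner` and its methods are hypothetical names; a real deployment would more likely compare results asynchronously, outside the transaction path, but the rule is the same: the legacy result stays authoritative, and every difference is recorded.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

class ShadowRunner<I> {

    // One observed difference; candidate is null when the new code threw.
    record Discrepancy<T>(T input, BigDecimal legacy, BigDecimal candidate) {}

    private final Function<I, BigDecimal> legacy;    // the result the business still uses
    private final Function<I, BigDecimal> candidate; // the new implementation under observation
    private final List<Discrepancy<I>> discrepancies = new ArrayList<>();

    ShadowRunner(Function<I, BigDecimal> legacy, Function<I, BigDecimal> candidate) {
        this.legacy = legacy;
        this.candidate = candidate;
    }

    // Runs both implementations on the same input and records any difference.
    // Always returns the legacy result, so the new code cannot affect production.
    BigDecimal process(I input) {
        BigDecimal expected = legacy.apply(input);
        try {
            BigDecimal actual = candidate.apply(input);
            // compareTo ignores scale, so 10.0 and 10.00 count as equal.
            if (actual == null || actual.compareTo(expected) != 0) {
                discrepancies.add(new Discrepancy<>(input, expected, actual));
            }
        } catch (RuntimeException e) {
            // A crash in the new code is a discrepancy too, not a production outage.
            discrepancies.add(new Discrepancy<>(input, expected, null));
        }
        return expected;
    }

    // Snapshot for an alerting or reporting job to inspect.
    List<Discrepancy<I>> discrepancies() {
        return List.copyOf(discrepancies);
    }
}
```

This sketch is not thread-safe; a shared instance would need a concurrent collection. Feeding `discrepancies()` into whatever alerting the team already uses covers the "alert on any discrepancy" step.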

Layer 5: Staged rollout with rollback. Process a subset of live transactions with the new system. Monitor for discrepancies. Increase the volume only when confidence is high.
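One common way to pick the subset is deterministic bucketing by transaction id, so a given transaction always goes to the same system and the percentage can be changed at runtime, including back to zero for rollback. `StagedRollout` and the 0-100 percent scale are assumptions of this sketch, not a prescribed design.

```java
class StagedRollout {

    // Share of transactions (0-100 percent) sent to the new system. It is raised
    // in small steps while discrepancy monitoring stays clean; setting it back
    // to 0 is the rollback.
    private volatile int percentNew;

    StagedRollout(int percentNew) {
        setPercentNew(percentNew);
    }

    void setPercentNew(int percent) {
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 0 and 100");
        }
        this.percentNew = percent;
    }

    // Deterministic routing: String.hashCode is specified in the String API
    // documentation, so a given id lands in the same bucket on every run and
    // every JVM. Raising the percentage only adds buckets, so a transaction
    // already on the new system stays there as the rollout grows.
    boolean useNewSystem(String transactionId) {
        int bucket = Math.floorMod(transactionId.hashCode(), 100);
        return bucket < percentNew;
    }
}
```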

Layer 6: Business validation. Have domain experts review sample outputs. Do the numbers make sense? Do edge cases behave correctly? Trust their intuition — they have been using this system for years.

No single layer is sufficient. AI interpretation might miss subtleties. Characterization tests might not cover rare edge cases. Shadow running might not exercise every code path. But stack all six layers, and the probability of a significant defect reaching production drops dramatically.
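Under the simplifying assumption that the layers miss defects independently of one another (which is what "holes that do not align" amounts to), the chance that a defect escapes all of them is the product of each layer's miss rate $p_i$. With a purely hypothetical miss rate of 20% for each of the six layers:

```latex
P(\text{defect reaches production}) = \prod_{i=1}^{6} p_i = 0.2^{6} = 0.000064 = \frac{1}{15\,625}
```

The independence assumption carries the whole result. If two layers share a blind spot, for example test data and a shadow run both drawn from the same quarter and both missing the same seasonal case, their holes line up and the real escape rate sits well above the product.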

There is a secondary benefit to this approach that often proves more valuable than the modernization itself: explicit knowledge.

The legacy VBA encoded business rules implicitly. Nobody could articulate these rules without reading the code. Now you have a test suite with hundreds of documented examples. The AI-generated explanations, reviewed and corrected, become prose documentation. The characterization tests become executable specifications.

When the next developer joins the team, they do not need to reverse-engineer a VBA module. They can read the tests. They can see exactly what inputs produce what outputs. They can understand the business rules through examples rather than through archaeological excavation.

If you are facing a legacy VBA modernization project, here is where to start:

Inventory and assess. List every VBA module, form, and macro. Note which ones are business-critical versus convenience utilities. Identify the highest-risk components — those that handle money, compliance, or customer data.

Extract knowledge with AI. Feed the critical VBA code to an AI assistant. Ask it to explain the business logic. Use the dialog in your IDE to document findings in a file that will guide further investigation. Mark areas of uncertainty.

Capture the oracle. Run the legacy system with representative inputs. Record every output. Build your golden master dataset.

Build the test harness. Create the Java test suite. Verify that running the captured inputs through the legacy system produces the captured outputs. This sounds circular, but it validates your capture process.

Incremental replacement. Rewrite one function at a time. Run the characterization tests after each change. Mismatches are defects until proven otherwise.
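The circular-sounding check in the harness step, replaying captured inputs through the legacy system, can be reduced to a comparison of two capture runs. A minimal sketch, assuming outputs are stored as the exact strings the legacy system produced and keyed by a hypothetical input id; `CaptureReplayCheck` and `unstableInputs` are illustrative names.

```java
import java.util.List;
import java.util.Map;
import java.util.Objects;

class CaptureReplayCheck {

    // Compares two capture runs of the legacy system, keyed by input id, with
    // outputs stored exactly as captured. An id whose output differs, or that is
    // missing from the replay, points at nondeterminism (current date, random
    // numbers, external lookups) or a broken capture step.
    static List<String> unstableInputs(Map<String, String> firstRun, Map<String, String> replay) {
        return firstRun.keySet().stream()
                .filter(id -> !Objects.equals(firstRun.get(id), replay.get(id)))
                .sorted()
                .toList();
    }

    public static void main(String[] args) {
        Map<String, String> first = Map.of("Q-1", "125.00", "Q-2", "88.10", "Q-3", "310.00");
        Map<String, String> second = Map.of("Q-1", "125.00", "Q-2", "88.15");
        System.out.println(unstableInputs(first, second)); // [Q-2, Q-3]
    }
}
```

Inputs that show up here need an explanation before they enter the baseline; otherwise the characterization tests will report mismatches that are noise in the oracle rather than defects in the new code.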

The key is patience. Rushing this process invites the very failures that make legacy modernization notorious. Each layer of validation takes time to implement. That time is an investment in confidence.

Organizations often delay legacy modernization because the risk feels high. What if the new system breaks something? What if we lose functionality? What if customers notice problems?

These concerns are valid. But they must be weighed against the risk of doing nothing. The VBA developer who maintains the system is retiring. The vendor’s platform is approaching end-of-support. The audit requirements are becoming stricter. The business is growing faster than the legacy customizations can handle.

The risk of modernization is visible and manageable. The risk of continued stagnation is silent until it becomes catastrophic: the day the application becomes corrupted, the day the only person who understands it leaves, the day an auditor asks for documentation that does not exist.

Modernizing legacy VBA is not a technology problem. It is a knowledge extraction problem with a validation requirement that demands defense in depth.

AI accelerates the extraction phase. Java tests provide the validation framework. The Swiss cheese model ensures that no single failure mode can compromise the outcome.

The alternative, rewriting based on assumptions about what the system should do, is how modernization projects fail. Identical results are the only acceptable standard when business continuity depends on the outcome.

The application that runs your business deserves better than hope. It deserves layers of validation so robust that confidence replaces anxiety. Start building those layers today.
